Consequence Scanning for AI Procurement and Adoption: Play Testing!

Do you work in or with local government, creating or purchasing AI tools and services? Come along to one of our free workshops in June to help test a new version of Consequence Scanning and learn practical techniques for assessing the impacts of AI.

Careful Industries is collaborating with procurement expert Dr Albert Sanchez-Graells and the Bristol Digital Futures Institute to create a version of Consequence Scanning designed to support local authorities that are buying, creating, and adopting AI tools and services, and we need your help.

What is Consequence Scanning?

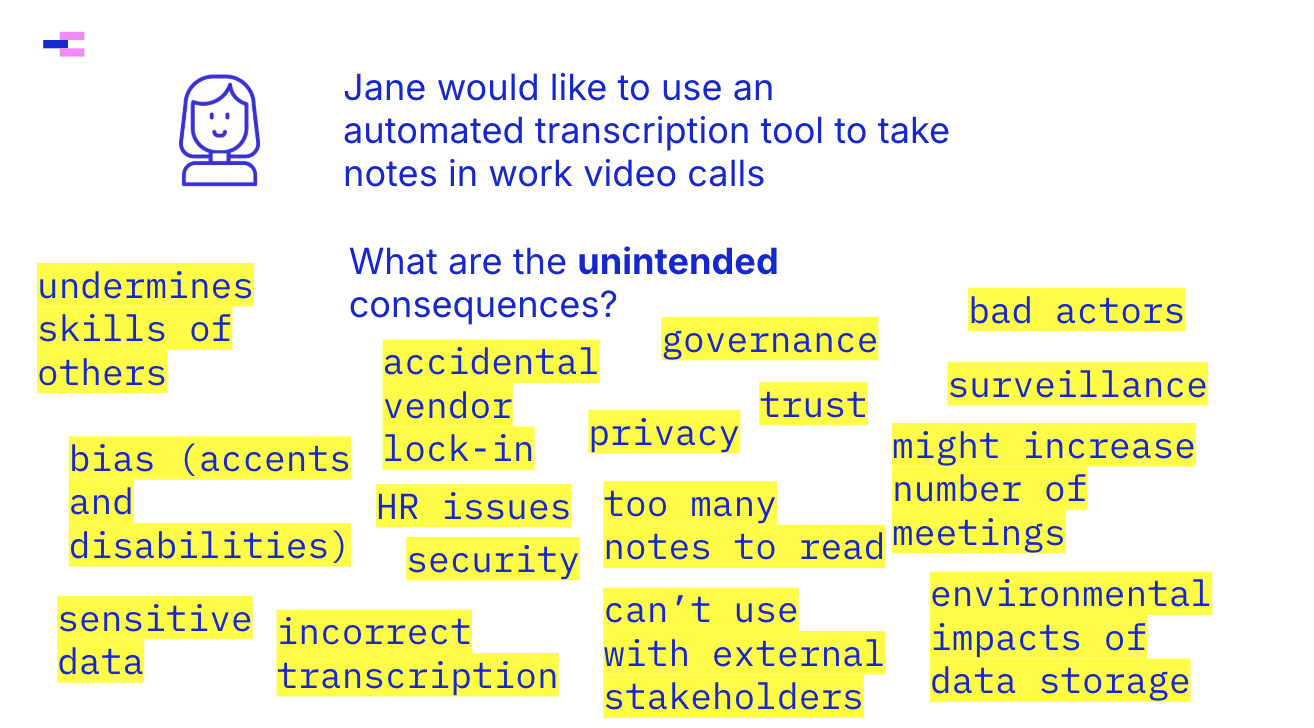

Consequence Scanning is a workshop format that helps small teams play through the implementation and roll-out of a new product or service and create a practical risk register to guide further development and maintenance.

Through a series of prompts and questions, the format helps digital teams and leaders apply their knowledge and expertise so they can better anticipate the social, environmental, legal, and technical risks of a product or service, leading to better planning and decision-making and lower-risk deployment of new technologies.

Since we shared Consequence Scanning at Doteveryone in 2019, it’s been adopted by public services and corporate teams all over the world – and it’s time to update it, to reflect the challenges and dilemmas that come with creating, purchasing, and adopting AI.

At Careful Industries, we’ve been using a slightly adapted version of Consequence Scanning and, as part of the Think Before You B(AI) project, have conducted research with experts in local government to find out how an updated tool could support their digital transformation and AI implementation projects. We need your help to see if it works – and in return you’ll learn practical techniques for assessing the social, ethical, and environmental impacts of AI.

Consequence Scanning for Public Sector AI

Updated to reflect contemporary concerns and challenges with adopting AI, the new format supports clearer goal setting. It starts by helping teams get specific about what terms like “efficiency” and “effectiveness” mean in practice. What does it really mean to do more with less, and can AI help deliver that?

It then offers a guided set of prompts to unpack AI-specific impacts for both the public and workers, broader outcomes for service delivery and maintenance, and the overall social context.

For this playtest we’re going to focus on AI for meeting minutes: what are the pros and cons, and what safeguards and mitigations need to be rolled out to ensure public benefit?

If you work in or with local authorities creating or purchasing AI and would like to join the playtest, please sign up for the first virtual event, from 10am to 11:30am on 24 June 2025, and we’ll be in touch very soon.