Developing Consequences 2.0

Results from our playtests, and what we learnt developing an updated set of tools “for the AI age”

The original Consequence Scanning toolkit draws on two sets of prompts designed to help participants understand the unforeseen outcomes of a product or service. One set of cards offers useful information about frequently occurring harms, such as low digital understanding and weak security and monitoring; the other nudges participants into speculation about probable real-world outcomes.

Some prompts from the original Doteveryone Consequence Scanning kit

One of the useful things about Consequence Scanning is that it can be used in a wide range of contexts and can apply at different levels of scale. However, the AI impacts training we do at Careful Industries had shown us that more specific guidance was necessary to support decisions about AI adoption: there are a number of AI-specific concerns, such as the potential for codification of bias and discrimination into a process and the secondary impacts of automation, that need more careful unpacking. Moreover, the research interviews we had undertaken as part of the Think Before You (B)AI project had shown that levels of awareness about the social, environmental, and democratic impacts of AI varied widely in local authorities. It was not reasonable to expect everyone to be an expert in this emerging field, so it would be useful to provide some more structured support to guide the process.

Version 1.0

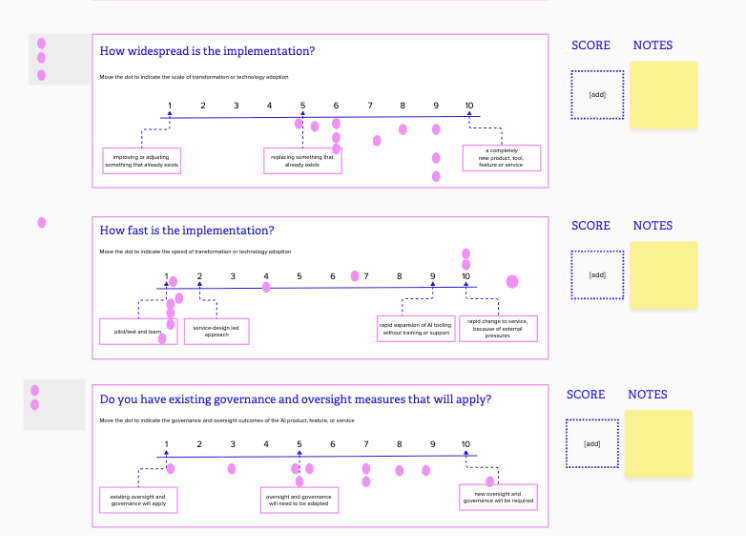

The first version of the updated toolkit set out a very detailed anticipatory framework that offered a series of structured activities for articulating risks and benefits. This started with a detailed section on understanding the benefits and opportunities created by using a product or service and went on to capture some very specific risks and detailed indicators. This version was playtested by people working in a range of roles in local authorities.

Playtesters recognised that a very detailed approach had its uses, but it was too complicated and required a high degree of understanding of both workflows and the impacts of AI. The exercises generated some useful conversations, but they also required very active facilitation and would be best delivered after complementary training on the social, environmental, and democratic impacts of AI. Overall, the granularity of the exercises felt more suitable for supporting product design than for informing management or strategic decisions about adoption and adaptation.

It was also difficult to get consistent responses to some of the questions: people working in different functions had different perceptions of issues such as scale and complexity.

A screengrab from the first iteration of the new tool

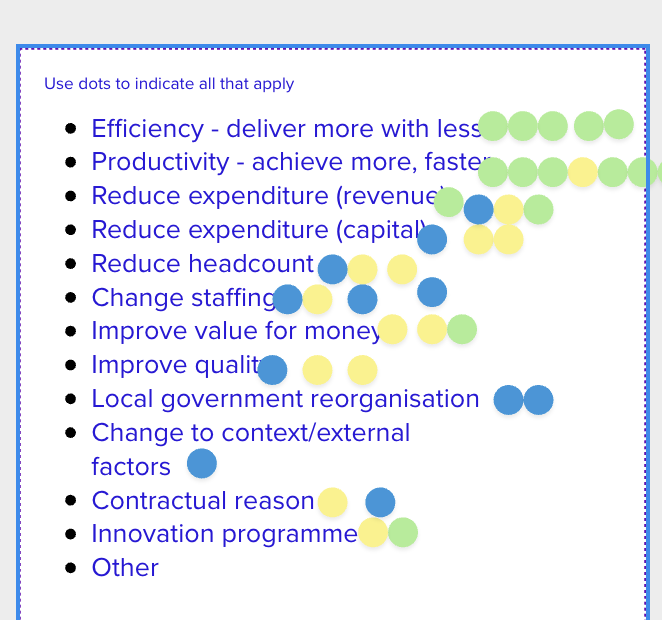

We also found that pre-populating the “benefits” section of the activity led to a “tick all that apply” approach; people found it difficult to focus on a single benefit, as the USP of general-purpose tools tends to be that they deliver multiple benefits at once. While multiple benefits are clearly welcome for any technology, they are difficult to prioritise, and we quickly realised it would be more useful to pick out a small number of significant benefits and understand them in detail. The Consequences approach is all about navigating trade-offs, and to do that it is useful to articulate a clear understanding of both benefits and harms.

A screengrab from the first version of the tool, showing dot voting to identify the benefits created by a tool.

The next iteration needed to be much lighter touch so that people taking part could quickly see the wood for the trees and focus on the important and urgent issues, rather than getting distracted.

Version two: Consequences Workshop

This slimmed-down version was further iterated through small-group testing with some of Careful Industries’ clients. While the original version of Consequence Scanning created a granular risk assessment, this version – Consequences 2.0 – helps to highlight critical and strategic issues around AI use and adoption, with a focus on building robust mitigation plans and understanding trade-offs.

This version of the tool still needs additional facilitation and training resources; participants need a basic understanding of some of the impacts of AI, as well as some guidance to navigate the different sections, particularly the more detailed trade-offs. However, the workflow is much more compact: in testing, teams were able to complete assessments in 45 minutes to an hour, depending on the complexity of the decision being made. Even so, this is still very much a workshop format rather than a “self-serve” offering.

A refined version of the benefits section, from the workshop version of Consequences.

The benefit of the workshop format is that it outputs a fairly granular risk analysis that can help inform decisions about suitability, risks of adoption, and ongoing approaches to quality assurance. Rather than simply testing your tool or product against the things it’s meant to do, it provides a way of understanding and monitoring your product’s likely impacts over time. This is particularly important for general-purpose tools, which will have different consequences for different people in different settings.

However, the Consequences Workshop is too complex to deliver the “vibe check” that might be more helpful for teams under pressure to quickly roll out technologies and deliver results. Having tested two iterations, we realised it would also be useful to create something that could be used quickly and easily to support decision-making in team meetings, or be used by one person running through the pros and cons of an AI application.

Version three: Consequences Check

The Consequences Check is a canvas that can be completed by one person or in a small group. It is not a replacement for a full risk analysis, but the start of a process of enquiry, learning and monitoring that can continue through the lifetime of a product or service. It is designed to answer the following questions:

Is this tool or product the right thing to use in this context?

What obvious things haven’t we thought of?

Is this a viable short-term solution or a long-term fix?

What urgent risks need monitoring?

The third blog post in this series introduces the Consequences Check in detail. If you would like to learn more about the Consequences Workshop, get in touch at hello@careful.industries